Ignoring the artwork side of things, a game is a piece of software; and as serious engineers, we knew that we could not fall back on “it works on my machine”; or, as the case may be in Unreal Engine, “works on my machine in the Unreal Editor”. We needed at least an independent build and test pipeline. Initially we wanted to target only 64-bit Windows; but a long time ago, we found out that if we wanted to squeeze as much frame rate as possible out of our game on Steam Deck, we would also need to produce 64-bit Linux builds. We didn’t want to do all of this work manually–works on my machine, anyone?–and we wanted all the creature comforts of a CI/CD platform. In short, we wanted to:

- detect changes in our Perforce source code repository

- check out the latest changes

- produce Linux and Windows release builds

- test the builds

- deploy the builds on test machines

- report errors, manage built artefacts

Easy–in principle.
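To make the first step concrete, here is a minimal sketch of change detection. It assumes the usual one-line-per-changelist text that `p4 changes` prints; the function names and the sample changelists are hypothetical, and in practice a CI server would normally do this polling for you.

```python
import re
from typing import Optional

# Shape of one line printed by `p4 changes`, for example:
#   Change 1042 on 2024/01/15 by alice@ws 'Fix jump height'
# (the changelists used here are made up for illustration)
CHANGE_LINE = re.compile(r"^Change (\d+) on ")


def latest_change(p4_changes_output: str) -> Optional[int]:
    """Return the highest changelist number found in the output, or None."""
    numbers = [
        int(m.group(1))
        for line in p4_changes_output.splitlines()
        if (m := CHANGE_LINE.match(line))
    ]
    return max(numbers, default=None)


def needs_build(last_built: Optional[int], latest: Optional[int]) -> bool:
    """A build is due when the depot holds a changelist newer than the last one built."""
    if latest is None:
        return False  # nothing submitted yet
    return last_built is None or latest > last_built
```

Feeding it the captured output of `p4 changes` for the project's depot path, together with the last changelist that was built, answers the only question the trigger has to ask: is there anything new?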

Where to run?

Cloud!–of course. But wait; as much as we are willing to spend money where necessary, we first measured how long it takes to build and test the game, and found the CPU, GPU, and memory combination that gave the fastest time for each step of the build, test, deploy cycle.

| Task | CPU [cores] | GPU [virt] | RAM [GiB] | I/O [GiB] | Network [GiB] | Time [min] |
|---|---|---|---|---|---|---|
| Build [W64 and L64] | 24 | 0 | 32 | 6 | 5 | 5 |
| Test [low, W64 and L64] | 4 | 0.2 | 8 | 0.8 | 0.2 | 6 |
| Test [med, W64 and L64] | 8 | 0.3 | 12 | 0.8 | 0.2 | 6 |
| Test [high, W64 and L64] | 16 | 0.5 | 16 | 0.8 | 0.2 | 6 |
| Deploy | 4 | 0 | 8 | 0.2 | 0.8 | 2 |

In total, we’re looking at four kinds of machines; except for the last deploy step, each machine’s runtime is multiplied by 2: once for Windows and once for Linux. That makes 10 minutes of build time, followed by 36 minutes of tests, and closing with 2 minutes of deployment; all in all, roughly 100 minutes of total compute time, roughly 25 minutes wall time. We tested all of this load on Proxmox 8.1 running on an AMD Ryzen 9 5950X machine with an RX 6900 XT, 128 GiB RAM, NVMe-backed ZFS RAID, and 10 Gbps networking. The build machines generated the most disk and network I/O, used up the most CPU time, and consumed the most memory; the other machines were fairly modest in their hardware usage. We constrained the test machines to simulate our target performance levels, with a VirtIO virtualized GPU.
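The per-step minutes can be checked straight from the table. The sketch below uses only the table's time column; the `Task` type is hypothetical, and the wall-time function assumes that the Windows and Linux variants of a step run in parallel while the steps themselves run one after another. Under that assumption the itemised rows give 48 machine-minutes and the 25 minutes of wall time quoted above; the rounder 100-minute figure is not derived here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    minutes: int    # "Time" column of the table
    platforms: int  # 2 = Windows and Linux, 1 = platform-independent


TASKS = [
    Task("build", 5, 2),
    Task("test-low", 6, 2),
    Task("test-med", 6, 2),
    Task("test-high", 6, 2),
    Task("deploy", 2, 1),
]


def machine_minutes(tasks):
    """Total minutes summed over every machine that has to run."""
    return sum(t.minutes * t.platforms for t in tasks)


def wall_minutes(tasks):
    """Elapsed minutes, assuming platforms run in parallel and steps run in sequence."""
    return sum(t.minutes for t in tasks)


print(machine_minutes(TASKS))  # 48 = 10 (build) + 36 (tests) + 2 (deploy)
print(wall_minutes(TASKS))     # 25
```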

If we wanted to use AWS for these compute needs, the required hardware maps roughly to c5a.8xlarge, g5.xlarge, g5.2xlarge, and g5.4xlarge instances. Adding a build server, EBS, and a few other bits, we’d be looking at about £150 per month fixed cost; and then, if we are super careful about keeping the machines running for only as long as strictly necessary, £1 per build. We want to see the build results as soon as changes are pushed, so we didn’t want to batch, or to run only one build a day; on an average day, we’d need to run 5 builds; when finishing up a sprint, as many as 20. That puts us at about £10 per average day in builds; all in all, it’d come to £300 a month.
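One way to read those figures is as a fixed cost plus a per-build cost. The sketch below is a back-of-the-envelope model, not an AWS price calculation: the 30-day month and the idea of building every single day are assumptions, and the function name is hypothetical. With the numbers quoted above it lands on £300 a month, which is £10 a day once the fixed cost is spread across the month.

```python
def monthly_cost(fixed, per_build, builds_per_day, days=30):
    """Fixed monthly cost plus the cost of every build in the month (GBP)."""
    return fixed + per_build * builds_per_day * days


average = monthly_cost(fixed=150, per_build=1, builds_per_day=5)
print(average)       # 300
print(average / 30)  # 10.0 per day, fixed cost included

# Upper bound: the end-of-sprint rate of 20 builds a day, sustained all month.
print(monthly_cost(fixed=150, per_build=1, builds_per_day=20))  # 750
```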

Naturally, there’d be work beyond builds; we’d need to spin up ad-hoc machines to do gameplay testing, especially on different hardware configurations and on different networks to test network multiplayer; and no doubt there would be more tasks that add to the monthly bill. In total, we calculated the cloud-based solution to come to around £450 a month. Huh, £450 a month? And for things that really don’t need to be on the public Internet? We can have our own cloudground machine for less than a year of AWS bills.

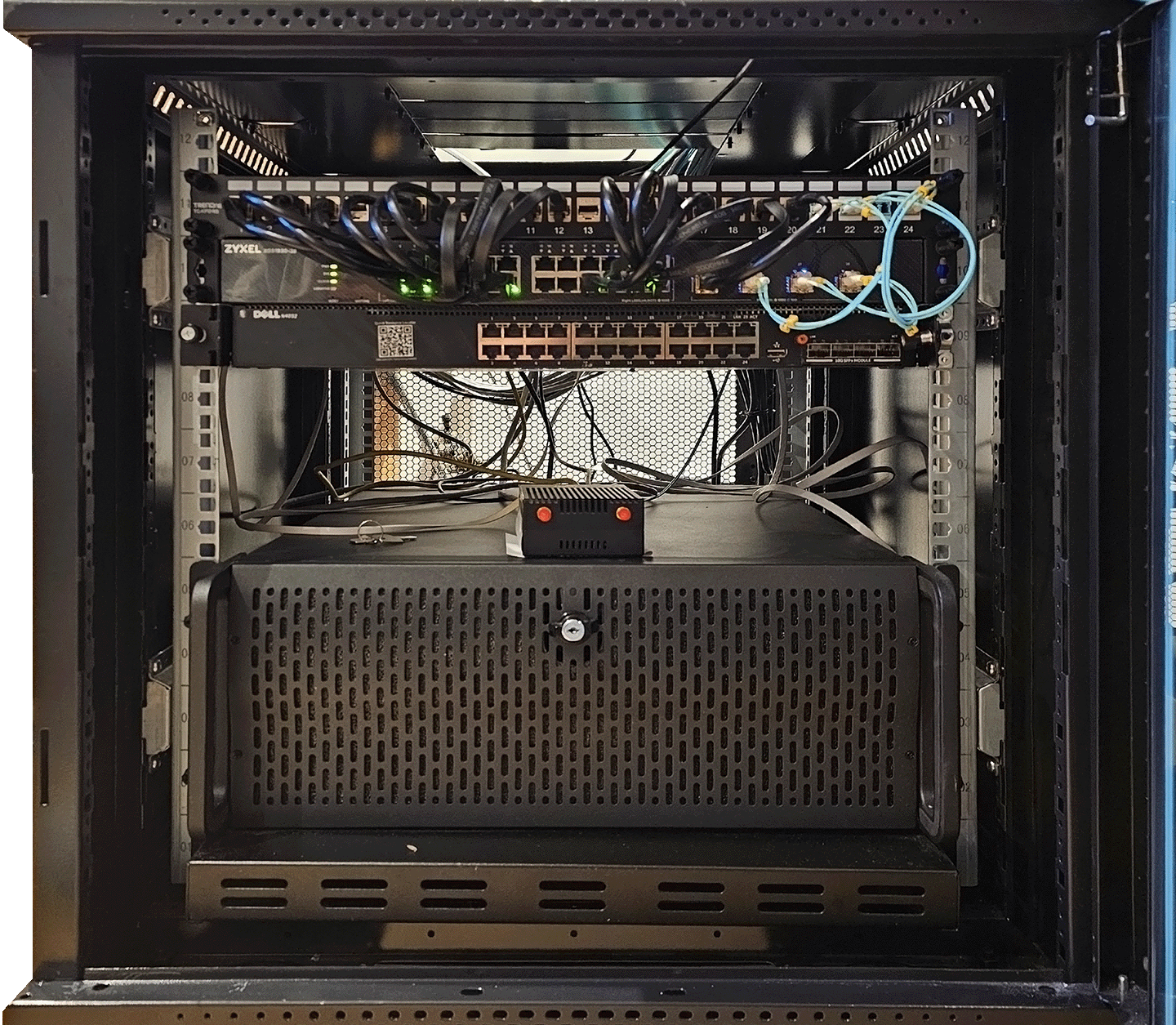

Enter masterchief and librarian

Based on the above super-optimistic calculations [we would probably forget to turn off the odd instance, we would probably need to add more storage, …], we decided to build our own hardware. We have a reliable 1 Gbps up and down internet connection with a static IPv4 address; and if we configure the machines carefully, they will only add approximately £20 to our (well, no, my) monthly electricity bill. masterchief is an AMD Ryzen 9 5950X, RX 6900 XT, 128 GiB RAM, 3 × 2 TiB + 1 TiB NVMe, 2.5 Gbps and 10 Gbps RJ45 networking; librarian is an Intel Pentium Silver N6005, 16 GiB RAM, 512 GiB NVMe storage, 3 × 2.5 Gbps RJ45 ports and 2 × 10 Gbps SFP+ ports for networking.
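The break-even point follows from the same numbers. The actual hardware price is not stated here, so the sketch below plugs in the only bound the text gives, "less than a year of AWS bills", that is at most 12 × £450; the function name is hypothetical. Even at that upper bound, and after paying for electricity, the hardware would pay for itself in about 13 months.

```python
import math


def break_even_months(hardware_cost, cloud_monthly, running_monthly):
    """Whole months until owning the hardware is cheaper than renting (GBP)."""
    saving = cloud_monthly - running_monthly
    if saving <= 0:
        raise ValueError("owning never pays off if it costs more per month than renting")
    return math.ceil(hardware_cost / saving)


# Upper bound on the hardware price taken from the text: one year of cloud bills.
upper_bound = 12 * 450
print(break_even_months(upper_bound, cloud_monthly=450, running_monthly=20))  # 13
```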

Don’t name your machines, they’re not pets! I know–as consolation, we only named the hypervisors; the machines they spin up do not have meaningful names. In any case, both of them are much nicer in person!

With our masterchief server and librarian low-powered mini PC, we can get everything installed and configured. Both masterchief and librarian run Proxmox [now at version 8.1]. In both cases, Proxmox is the bare-metal virtualisation environment.

librarian is–amongst other things–running pfSense and TeamCity. pfSense handles our network, including firewall, VPN, DNS, and DHCP; TeamCity is JetBrains TeamCity Professional in an on-premises installation. Importantly, librarian is always on. The TeamCity instance it runs wakes up and suspends masterchief as necessary. masterchief is the development, build, and test machine. The development tasks mainly involve network playability testing on different hardware and under different network conditions. Since version 8.1, Proxmox has had production-ready support for software-defined networking, so we can easily test various network conditions in network multiplayer scenarios.
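How the wake-up happens is not described here; a common way for an always-on machine to start a sleeping one is Wake-on-LAN, so the sketch below assumes that. It builds the standard magic packet (six `0xFF` bytes followed by the target MAC address repeated 16 times) and sends it as a UDP broadcast. The MAC address, broadcast address, and port are placeholders.

```python
import socket


def magic_packet(mac: str) -> bytes:
    """Build a Wake-on-LAN magic packet: 6 x 0xFF, then the MAC repeated 16 times."""
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    mac_bytes = bytes.fromhex(hex_digits)  # raises ValueError on non-hex input
    return b"\xff" * 6 + mac_bytes * 16


def wake(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Send the magic packet as a UDP broadcast (address and port are assumptions)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(magic_packet(mac), (broadcast, port))


# Placeholder MAC address; a real setup would use the build server's NIC.
packet = magic_packet("00:11:22:33:44:55")
print(len(packet))  # 102
```

A build step on the always-on machine could call `wake(...)` before dispatching work; the network card and firmware of the target need Wake-on-LAN enabled for the packet to have any effect.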

Networking configuration is not too complex–there are separate VLANs for the Proxmox management network, the Dream on a Stick network, and the rest of the devices. We have no need to expose any of the services directly on the internet, so everything is nicely isolated.

Finally, the basic hypervisor setup shows the main operating modes: librarian runs 24 × 7, and it is just powerful enough to handle the tasks we throw at it; masterchief, on the other hand, runs only when needed, and we make sure we do not let it idle; after all, it is our electricity bill and carbon footprint that make an idle masterchief a very bad idea.
